- Install Apache Spark over Hadoop cluster install

- Install Apache Spark over Hadoop cluster password

- Install Apache Spark over Hadoop cluster download

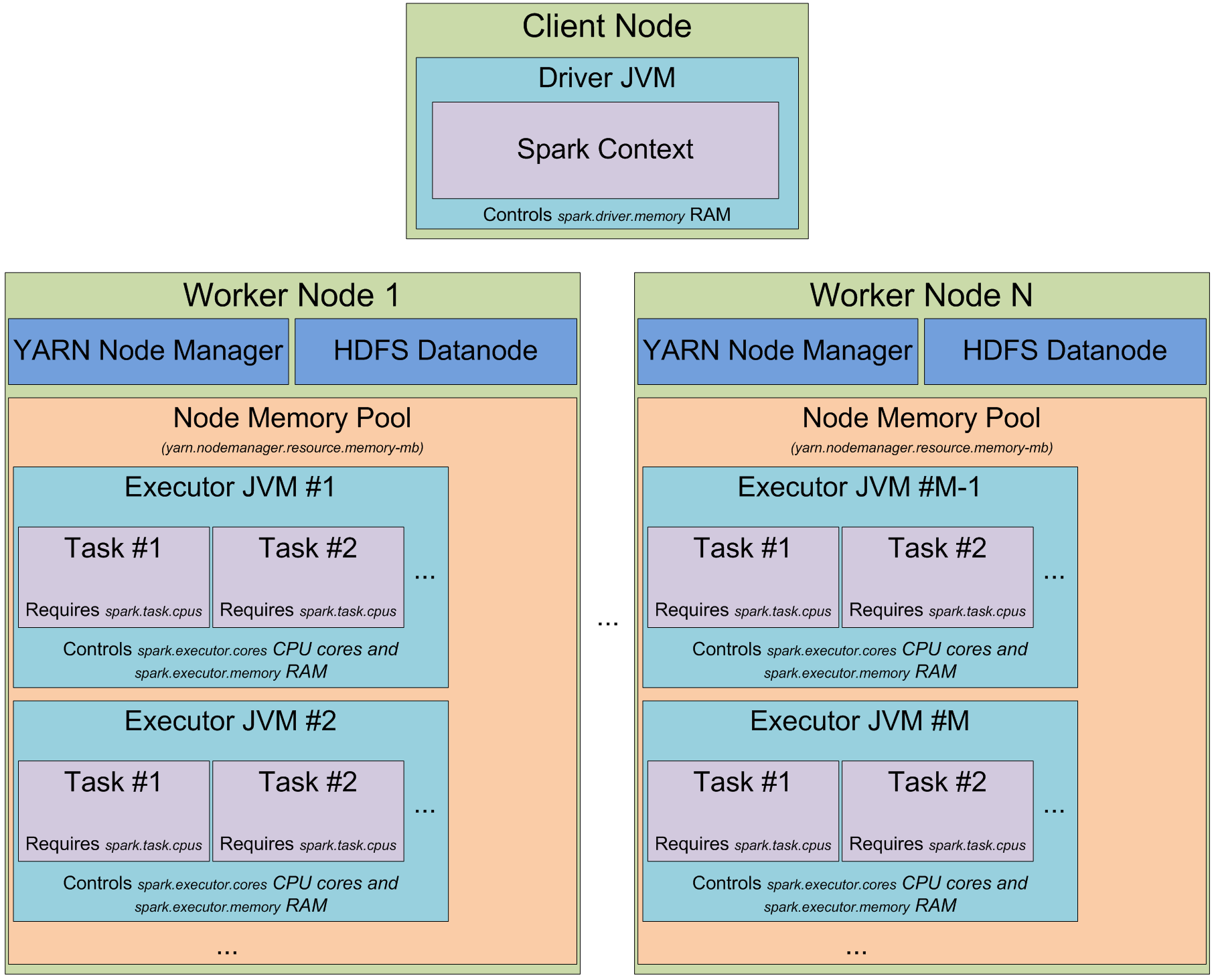

Edit /home/hadoop/.profile and add the line that places the Hadoop binaries on your PATH.

Memory allocation can be tricky on low-RAM nodes because the default values are not suitable for nodes with less than 8GB of RAM. This section highlights how memory allocation works for MapReduce jobs and provides a sample configuration for 2GB RAM nodes.

The Memory Allocation Properties

A YARN job is executed with two kinds of resources:

- An Application Master (AM) is responsible for monitoring the application and coordinating distributed executors in the cluster.
- Executors created by the AM actually run the job. For a MapReduce job, they perform map or reduce operations in parallel.

Both run in containers on worker nodes. Each worker node runs a NodeManager daemon that is responsible for container creation on the node. The whole cluster is managed by a ResourceManager that schedules container allocation on all the worker nodes, depending on capacity requirements and current load.

Four types of resource allocations need to be configured properly for the cluster to work:

- How much memory can be allocated for YARN containers on a single node. This limit should be higher than all the others; otherwise, container allocation will be rejected and applications will fail. However, it should not be the entire amount of RAM on the node. This value is configured in yarn-site.xml with a property whose name ends in -mb.
- How much memory a single container can consume and the minimum memory allocation allowed.
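The ordering of these limits can be sanity-checked before any configuration file is edited. The following is a minimal shell sketch: the function name, the variable names, and the megabyte values are illustrative assumptions for a node with 2GB of RAM, not settings taken from this guide. It treats "higher than all the others" loosely, allowing the container maximum to equal the per-node limit.

```shell
#!/bin/sh
# Hypothetical values, in MB, for a node with 2 GB of RAM (assumptions).
node_ram_mb=2048        # physical RAM on the worker node
yarn_node_mb=1536       # memory YARN may hand out to containers on this node
container_max_mb=1536   # most memory a single container may consume
container_min_mb=128    # smallest allocation a container may request

# check_memory_plan NODE_RAM YARN_NODE CONTAINER_MAX CONTAINER_MIN
# Prints a verdict and returns non-zero when the limits are inconsistent.
check_memory_plan() {
    if [ "$2" -ge "$1" ]; then
        echo "YARN per-node limit must be smaller than the node's RAM"
        return 1
    fi
    if [ "$3" -gt "$2" ]; then
        echo "container maximum exceeds the YARN per-node limit"
        return 1
    fi
    if [ "$4" -gt "$3" ]; then
        echo "container minimum exceeds the container maximum"
        return 1
    fi
    echo "memory plan is consistent"
}

plan_status=$(check_memory_plan "$node_ram_mb" "$yarn_node_mb" \
    "$container_max_mb" "$container_min_mb")
echo "$plan_status"
```

A plan that hands all 2048MB to YARN, or one whose container maximum is larger than the per-node limit, is reported as inconsistent instead of failing later at container allocation time.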

Install Apache Spark over Hadoop cluster download

Log into node-master as the hadoop user and download the Hadoop tarball.

Add the Hadoop binaries to your PATH.
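The exact PATH line is not reproduced in this text. As a sketch only, assuming the tarball was unpacked to /home/hadoop/hadoop (a hypothetical location; substitute your own), lines like these in the hadoop user's profile would put the Hadoop commands on the PATH:

```shell
# Assumption: Hadoop was unpacked to /home/hadoop/hadoop; adjust to your layout.
HADOOP_HOME=/home/hadoop/hadoop

# Prepend bin (client commands) and sbin (daemon start/stop scripts) to PATH.
PATH="$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH"
export HADOOP_HOME PATH
```

The existing PATH is kept at the end, so system commands continue to resolve as before.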

View the node-master public key and copy it to your clipboard to use with each of your worker nodes. In each Linode, make a new file master.pub in the /home/hadoop/.ssh directory. Paste your public key into this file and save your changes. Then copy your key file into the authorized key store.
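The last step, adding master.pub to the authorized key store, might look like the sketch below. The function name `authorize_master_key` is hypothetical, and the sketch assumes the store is the standard OpenSSH `authorized_keys` file in the same .ssh directory.

```shell
# authorize_master_key SSH_DIR
# Appends SSH_DIR/master.pub to SSH_DIR/authorized_keys unless it is already there.
authorize_master_key() {
    ssh_dir=$1
    # grep -qxFf: quiet, whole-line, fixed-string match using master.pub as the pattern.
    if ! grep -qxFf "$ssh_dir/master.pub" "$ssh_dir/authorized_keys" 2>/dev/null; then
        cat "$ssh_dir/master.pub" >> "$ssh_dir/authorized_keys"
    fi
    # sshd ignores key files that other users can write to.
    chmod 700 "$ssh_dir"
    chmod 600 "$ssh_dir/authorized_keys"
}

# On each node, as the hadoop user:
# authorize_master_key /home/hadoop/.ssh
```

Checking for the key before appending keeps the file free of duplicates if the step is repeated.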

Install Apache Spark over Hadoop cluster password

Don’t forget to replace the sample IPs with your own.

Distribute Authentication Key-pairs for the Hadoop User

The master node will use an SSH connection to connect to other nodes with key-pair authentication. This will allow the master node to actively manage the cluster.

Log in to node-master as the hadoop user and generate an SSH key with `ssh-keygen -b 4096`. When generating this key, leave the password field blank so your Hadoop user can communicate unprompted.
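For scripted setups, the same key can be generated without prompts. This is a sketch under assumptions: the helper name `generate_cluster_key` is hypothetical, `-t rsa` is added because the key type is not stated in the guide, and `-N ""` stands in for leaving the password field blank.

```shell
# generate_cluster_key KEY_PATH
# Creates a 4096-bit RSA key pair with an empty passphrase at KEY_PATH.
generate_cluster_key() {
    key_path=$1
    # Refuse to overwrite an existing private key.
    if [ -e "$key_path" ]; then
        echo "key already exists: $key_path" >&2
        return 1
    fi
    mkdir -p "$(dirname "$key_path")"
    # -q: quiet, -N "": empty passphrase, -f: output file for the private key.
    ssh-keygen -q -t rsa -b 4096 -N "" -f "$key_path"
}

# On node-master, as the hadoop user:
# generate_cluster_key /home/hadoop/.ssh/id_rsa
```

The public half is written next to the private key with a `.pub` suffix, which is the file to share with the worker nodes.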

The steps below use example IPs for each node.
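As an illustration of mapping the node names to addresses, the sketch below prints name entries in the style of a hosts file. The use of a hosts file is an assumption, the function name `print_host_entries` is hypothetical, and the 192.0.2.x addresses come from a range reserved for documentation; replace them with the IPs of your own nodes.

```shell
# print_host_entries MASTER_IP NODE1_IP NODE2_IP
# Prints one "IP hostname" line for each of the three nodes used in this guide.
print_host_entries() {
    printf '%s node-master\n' "$1"
    printf '%s node1\n' "$2"
    printf '%s node2\n' "$3"
}

# Placeholder addresses only (assumption); substitute your nodes' real IPs.
host_entries=$(print_host_entries 192.0.2.1 192.0.2.2 192.0.2.3)
echo "$host_entries"
```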

Install Apache Spark over Hadoop cluster install

SSH keys will be addressed in a later section.

Install the JDK using the appropriate guide for your distribution (for example, Ubuntu), or install the latest JDK from Oracle.

Hadoop is an open-source Apache project that allows creation of parallel processing applications on large data sets, distributed across networked nodes. It is composed of the Hadoop Distributed File System (HDFS™), which handles scalability and redundancy of data across nodes, and Hadoop YARN, a framework for job scheduling that executes data processing tasks on all nodes.

Follow the Getting Started guide to create three (3) Linodes. They’ll be referred to throughout this guide as node-master, node1, and node2. It is recommended that you set the hostname of each Linode to match this naming convention. Run the steps in this guide from node-master unless otherwise specified.

Add a Private IP Address to each Linode so that your cluster can communicate with an additional layer of security.

Follow the Securing Your Server guide to harden each of the three servers. Create a normal user for the Hadoop installation, and a user called hadoop for the Hadoop daemons.
